Approximately 400 corporate decision makers have been surveyed about their confidence in their own corporate decision-making skills, their opinion of their peers’ skills, and their acceptance of corporate data-driven decision making in general, as well as of such decision making being augmented by artificial intelligence. The survey, “Corporate data-driven decision making and the role of Artificial Intelligence in the decision making process”, reveals the general perception of the data-driven environment available to corporate decision makers, e.g., the structure and perceived quality of available data. Furthermore, the survey explores the decision makers’ opinions about bias in available data and applied tooling, as well as their own and their peers’ biases and the possible impact on their corporate decision making.

“No matter how sophisticated our choices, how good we are at dominating the odds, randomness will have the last word” – Nassim Taleb, Fooled by Randomness.

We generate a lot of data, and we have an abundance of data available to us. Data is forecasted to continue to grow geometrically until kingdom come. There is little doubt that it will, as long as we humans and our “toys” are around to generate it. According to Statista Research, in 2021 we expect that a total of almost 80 zettabytes (ZB) will have been created, captured, copied or consumed. That number corresponds to 900 years of Netflix viewing, or to every single person (ca. 8 billion persons) having consumed 10 TB up to today (effectively since the early 2000s). It is estimated that there are 4.2 billion active mobile internet users worldwide. Of the total data, ca. 5% (ca. 4 ZB, or about 46 years of Netflix viewing) is being stored, with a rate of 2% of newly generated data. Going forward, the expectation is an annual growth rate of around 21%. The telecom industry (for example) expects one internet-connected device per square meter, monitoring and sensing its environment in real time, and that includes you, me and your pet. Combined with your favorite smartphone, a super advanced monitoring and data collection device in its own right, the resolution of the human digital footprint will increase manyfold over the next years. Most of this data will be discarded, though not before relevant metadata have been recorded and decided upon. Not before your digital fingerprint has been enriched and updated, for business and society to use for their strategies and policies, for their data-enriched decision making or possibly data-driven autonomous decision-making routines.
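As a quick sanity check of the orders of magnitude quoted above, here is a minimal Python sketch. It assumes decimal units (1 ZB = 10^21 bytes) and, purely for illustration, applies the 21% annual growth rate to the 80 ZB total; the helper name `project` is my own.

```python
# Back-of-the-envelope check of the data volumes quoted in the text.
ZB = 10**21  # bytes in a zettabyte (decimal definition, an assumption)
TB = 10**12  # bytes in a terabyte

total_bytes = 80 * ZB      # data created, captured, copied or consumed
population = 8 * 10**9     # ca. 8 billion persons

per_person_tb = total_bytes / population / TB
stored_zb = 0.05 * total_bytes / ZB  # ca. 5% of the total is stored


def project(volume_zb, annual_growth, years):
    """Compound a data volume forward at a fixed annual growth rate."""
    return volume_zb * (1 + annual_growth) ** years


print(f"data per person: {per_person_tb:.0f} TB")
print(f"stored data:     {stored_zb:.0f} ZB")
print(f"volume after 5 years at 21% growth: {project(80, 0.21, 5):.0f} ZB")
```

The point is only that the quoted figures are internally consistent: 80 ZB spread over 8 billion people is 10 TB each, and 5% of 80 ZB is 4 ZB.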

From the perspective of a data-driven decision-making process, the data being acted upon can be stored data as well as non-stored data, the latter being acted upon in real time.

This amount of existing and newly generated data continues to be heralded as extremely valuable, more often than not with the value or turnover of the Big 5, abbreviated FAANG (before Google renamed itself Alphabet and Facebook renamed itself Meta), offered as the proof point. “Data is the new oil” appears in presentations and articles on Big Data almost as often as Arnold Schwarzenegger appears in talks on AI. However, presenters and commentators on the value of data often forget that the original point of the comparison was that, just like crude oil, data needs to be processed and broken down in order to extract its value. That value-extraction process, as with crude oil, can be dirty and cause primary as well as secondary “pollution” that may be costly, not to mention time-consuming, to get rid of. Over the last couple of years some critical voices have started to question the environmental impact of our obsession with extracting meaning out of very big data sets.

I am not out to trash data science or the pursuit of meaning in data. Quite the contrary. I am interested in how to catch the real gold nuggets in the huge pile of data-dung and sort away the spurious false signals (deliberately or accidentally faked) that lead to sub-optimal data-driven decisions or outright black pearls (= death by data science).

Clearly, given the amount of data being generated in businesses, as well as in society at large, the perceived value of that data, or more accurately, the final end-state of the processed data (e.g., after selection, data cleaning, modelling, …) and the inferences derived from that processed data, data-driven decision making must be a value-enhancing winner for corporations and society.

The data-driven corporate decision making.

What’s wrong with human-driven decision making? After all, most of us would very firmly declare (maybe even solemnly) that our decisions are based on real data. The challenge (and yes, often a problem in critical decision making) is that our brain has a very strong ability (maybe even preference) for seeing meaningful patterns, correlations and relationships in data that is available to us digitally or has been committed to our memory from past experiences. The human mind has great difficulty dealing with randomness, spurious causality of events, and connectedness. Our brain will try to make sense of anything it senses: it will correlate, it will create coherent narratives of the incoherently observed, and it will replace correlations with causations to fit a compelling idea or belief. Also, the brain will filter out patterns and anomalies (e.g., like gorillas that crash a basketball game) that do not fit its worldview or constructed narrative. The more out of place a pattern is, the less likely it is to be considered. Human decision making is frequently based on spurious associations, fitting our worldview or preconceived ideas of a topic, and ignoring any data that appears outside our range of beliefs (i.e., “anomalies”). Any decision process involving humans will in one way or another be biased. We can only strive to minimize that human bias by reducing the bias-insertion points in our decision-making process.

A data-driven business is a business that uses available & relevant data to make more optimized and better decisions compared to purely human-driven ones. It is a business that gives more credibility to decisions based on available data and structural reasoning. It is a business that may be less tolerant of emotive and gut-feel decision rationales. It hinges its business on rationality and on translating its data into consistent and less uncertain decisions. The data-driven business approaches the so-called “Mathematical Corporation” philosophy, where human-driven aspects of decision making become much less important compared to algorithmic data-driven decisions.

It sounds almost too good to be true. So it may indeed be too good. It relies very much on having an abundance of reliable, unbiased and trustworthy (whatever that means) data to which we can apply our unbiased data processing tools and get out unambiguous analysis that will help make clear unbiased decisions, corporate decisions that are free of interpretation, emotions and biases. Disclaimer: this paragraph was intended to be ironic and maybe a bit sarcastic.

How can we ensure that we make great decisions based on whatever relevant data we have available? (note that I keep the definition of great decision a bit diffuse).

Ideally, we should start with an idea or hypothesis that we want to test and act upon. Based on our idea, we should design an appropriate strategy for data collection (e.g., what statisticians call experimental design), ensuring proper data quality for our analysis, modelling and final decision. Typically, after the data collection, the data is cleaned and structured (both steps likely to introduce biases), which makes it easier to commit to computing, analysis and possibly statistical or mathematical modelling. The outcome of the analytics and modelling provides insights that will be the basis for our data-driven decision. If we have done our homework on data collection, careful (and respectful) data post-processing, and understanding the imposed analytical framework, we can also judge whether the resulting insights are statistically meaningful, and whether our idea, our hypothesis, is relevant and significant and thus meaningful to base a decision upon. It seems like a “no-brainer” that the results of decisions are tracked and fed back into a given company’s data-driven process. This idealized process is depicted in the picture below.

The above depicts a very idealized data-driven decision process; let’s call it the “ideal data-driven decision process”. This process may provide better and more statistically sound decisions. However, in practice companies may follow a different approach to searching for data-driven insights that can lead to data-driven decisions. The picture below illustrates an alternative approach to utilizing the corporate and societal data available for decision making. To distinguish it from the above process, I will call it the “big-data driven decision process”, and although I emphasize big data, it can of course be used on any sizable amount of data.

The philosophy of the “big-data driven decision process” is that with sufficient data available, pattern and correlation search algorithms will extract insights that subsequently will lead to great data-driven decisions. The answer (to everything) is already in the big-data structure, and thus the best decision follows directly from our algorithmic approach. It takes away the need for a fundamental human understanding, typically via models, of the phenomena that we desire to act upon with a sought-after data-driven decision.

The starting point is the collected data available to a business or entity interested in using its data for business-relevant decisions. Data is not per se collected as part of an upfront idea or hypothesis. Within the total amount of data, subsets may sometimes be selected and often cleaned, preparing them for subsequent analysis, the computing. The data selection process often happens with some (vague) idea in mind of providing backup, or substance, for a decision that a decision maker or business wants to make. In other instances, companies let pattern search algorithms loose on the collected or selected data. Such algorithms are very good at finding patterns and correlations in datasets, databases and datastores (often residing in private and public clouds). Such algorithmic tools will provide many insights for the data-driven business or decision maker. Based on those insights, the decision maker can then form ideas or hypotheses that may support the formulation of relevant data-driven decisions. In this process, the consequences of a decision may or may not be directly measured, missing out on the opportunity to close the loop on the business data-driven decision process. In fact, it may not even be meaningful to attempt to close the loop, due to the structure of data required or the vagueness of the decision foundation.

The “big-data driven decision process” rarely leads to the highest quality in corporate data-driven decision making. In my opinion, there is a substantial risk that businesses could be making decisions based on spurious (nonsense) correlations, while falsely believing that such decisions are very well founded due to the use of data- and algorithm-based machine “intelligence”. Furthermore, the data-driven decision-making process, as described above, has a substantially higher number of bias-entry points than a decision-making process starting with an idea or hypothesis followed by a well thought-through experimental design (e.g., as in the case of our “ideal data-driven decision process”). As a consequence, a business may incur a substantial risk of reputational damage, on top of the consequences of making a poor data-driven business decision.

As a lot of the data available to corporations and society at large is generated by humans, directly or indirectly, it is also prone to human foibles. Data is indeed very much like crude oil, which needs to be refined to be applicable to good decision making. The refinement process, while cleaning up data and making it digestible for further data processing, analytics and modelling, may also introduce other issues that ultimately may result in sub-optimal decisions, data-driven or not. Thus, corporate decisions that are data-driven are not by definition better than ones that are more human-driven. Ultimately, the two are not even that different once the data has been refined and processed to a state that humans can actually act upon. It is important, however, to keep in mind that big data tends to have many more spurious correlations and adversarial patterns (i.e., patterns that look compelling and meaningful but are spurious in nature) than meaningful causal correlations and patterns.

Finally, it is a bit of a fallacy to believe that because many corporations have implemented big data systems and processes, decision-relevant data exists in abundance in those systems. Frequently, the amount of decision-relevant data is fairly limited, which may therefore also increase the risk and uncertainty of data-driven decisions based on it. The drawback of small data is again very much about the risk of looking at random events that appear meaningful. Standard statistical procedures can provide insights into the validity of small data analysis and the assumptions made, including the confidence that we can reasonably associate with them. For small-data-driven decisions it is far better to approach the data-driven decision-making process according to the ideal process described above, rather than attempting to select relevant data out of a bigger data store.

Intuition about data.

As discussed previously, we humans are very good at detecting real, as well as illusory (imagined), correlations and patterns. So are the statistical tools, algorithms and methodologies we apply to our data. Care must always be taken to ensure that the inferences (assumptions) being made are sensible and supported by statistical theory.

Correlations can help us predict the effect one event may have on another. Correlations may help us understand relationships between events and possibly also their causes (though that one is more difficult to tease out, as we will discuss below). However, we should keep in mind that correlation between two events does not guarantee that one event causes the other, i.e., correlation does not guarantee causation. A correlation simply means that there is a co-relation between X and Y. That is, X and Y behave in such a way (e.g., linearly) that a systematic change of X appears to be followed by a systematic change of Y. As plenty of examples have shown (e.g., see Tyler Vigen’s website Spurious Correlations), correlation between two events (X and Y) does not mean that one of them causes the other. They may really not have anything to do with each other. It simply means they co-relate to each other in a way that allows us to infer that a given change in one relates to a given change in the other. Our personal correlation detector, the one between our ears, will quickly infer that X causes Y after it has established a co-relation between the two.

To tease out causation (i.e., action X causes outcome Y) in a statistically meaningful way, we need to conduct a designed experiment, making appropriate use of a randomized setup. It is not at all rare to observe correlations between events that we know are independent and/or have nothing to do with each other (i.e., spurious correlation). Likewise, it is also possible to have events that are causally dependent while observing a very small or no apparent correlation, i.e., corr(X,Y) ≈ 0, within the data sampled. Such a situation could make us wrongly conclude that they have nothing to do with each other.
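To make the last point concrete, here is a minimal Python sketch of a causally dependent pair with (almost) no linear correlation. The example Y = X², with X sampled symmetrically around zero, is my own illustrative assumption and not taken from the survey; the helper `pearson` is written out by hand so the sketch only needs the standard library.

```python
import math
import random


def pearson(xs, ys):
    """Sample Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


rng = random.Random(42)  # fixed seed so the run is repeatable

# X is symmetric around zero and Y is completely determined by X.
x = [rng.uniform(-1.0, 1.0) for _ in range(10_000)]
y = [xi ** 2 for xi in x]
r_full = pearson(x, y)

# Restricting the sample to X > 0 makes the dependence visible again.
x_pos = [xi for xi in x if xi > 0]
y_pos = [xi ** 2 for xi in x_pos]
r_positive = pearson(x_pos, y_pos)

print(f"corr(X, Y) over the full range: {r_full:+.3f}")
print(f"corr(X, Y) for X > 0 only:      {r_positive:+.3f}")
```

Over the full range the correlation is close to zero although Y depends entirely on X, while a selective sample (X > 0) shows a strong correlation. How the data is sampled matters as much as the underlying relationship.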

Correlation is a mathematical relationship that co-relates the change of one event variable ∆X with the proportional change of another event, ∆Y = α ∆X. The degree of correlation between the events X and Y we can define as

$$\mathrm{corr}(X,Y) = \frac{\mathrm{cov}(X,Y)}{\sigma_X \, \sigma_Y} \approx \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2} \, \sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}$$

with the first part (after the equal sign) being the general definition of a correlation between two random variables. The second part is specific to n measurements (samples) related to the two events X and Y. If the sampled data does not exhibit a systematic proportional change of one variable as the other changes, corr(X,Y) will be very small and close to zero. For selective or small data samples, it is not uncommon to find the correlation between two events, where one causes the other, to be close to zero and thus “falsely” conclude that there is no correlation. Likewise, for selective or small data samples, spurious correlations may also occur between two events where no causal relationship exists. Thus, we may conclude that there is a co-relation between the events, and subsequently we may also “falsely” believe that there is a causal relationship. It is straightforward to get a feeling for these cautionary issues by simulation using R or Python.
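The kind of simulation mentioned above could look like the following Python sketch. All numbers are illustrative assumptions of mine: a sample size of 10 as “small”, thresholds of |corr| > 0.5 for “looks correlated” and |corr| < 0.1 for “looks uncorrelated”, and a causal model Y = 0.5·X + noise.

```python
import math
import random


def pearson(xs, ys):
    """Sample Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def independent_pair(rng):
    """X and Y have nothing to do with each other."""
    return rng.gauss(0, 1), rng.gauss(0, 1)


def causal_pair(rng):
    """Y is caused by X: Y = 0.5 * X + noise (0.5 is an assumed value)."""
    x = rng.gauss(0, 1)
    return x, 0.5 * x + rng.gauss(0, 1)


def share_of_samples(make_pair, predicate, n, trials, rng):
    """Fraction of simulated samples of size n whose correlation satisfies predicate."""
    hits = 0
    for _ in range(trials):
        pairs = [make_pair(rng) for _ in range(n)]
        xs = [p[0] for p in pairs]
        ys = [p[1] for p in pairs]
        if predicate(pearson(xs, ys)):
            hits += 1
    return hits / trials


rng = random.Random(7)  # fixed seed so the run is repeatable

# Independent events that nevertheless look strongly correlated.
spurious_small = share_of_samples(independent_pair, lambda r: abs(r) > 0.5, 10, 2000, rng)
spurious_large = share_of_samples(independent_pair, lambda r: abs(r) > 0.5, 500, 200, rng)
# Causally linked events that nevertheless look uncorrelated.
missed_small = share_of_samples(causal_pair, lambda r: abs(r) < 0.1, 10, 2000, rng)

print(f"independent, n=10:  share with |corr| > 0.5 = {spurious_small:.1%}")
print(f"independent, n=500: share with |corr| > 0.5 = {spurious_large:.1%}")
print(f"causal, n=10:       share with |corr| < 0.1 = {missed_small:.1%}")
```

With small samples a noticeable share of independent pairs looks strongly correlated, and a noticeable share of causally linked pairs looks uncorrelated. With the larger sample the spurious cases essentially disappear.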

The central limit theorem (CLT among friends) ensures that, irrespective of distribution type, as long as the sample size is sufficiently big (e.g., >30), sample statistics (e.g., mean, variance, correlation, …) will tend to be normally distributed. The variance of the sample statistic narrows as the sample size increases. Thus, for very large samples, the sample statistic converges to the true statistic (of the population). For independent events the correlation between those events will be zero (a direct consequence of independence). The CLT tells us that the sample correlations between independent random events will be approximately normally distributed around zero. Thus, there will be a non-zero chance that a sample correlation is different from zero, violating our expectation for two independent events. As said, our intuition (and math) should tell us that as the sample size increases, the spread of the sample correlation should narrow increasingly around zero, which is our true expectation for the correlation of independent events. Thus, as the sample size grows, the spread of sampled correlations, that is, of the spurious non-zero correlations, reduces to zero, as expected for a database that has been populated by sampling independent random variables. So all seems good and proper.
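A minimal Python sketch of this narrowing, under the assumption that both variables are independent standard normal draws (for which the spread of the sample correlation is known to be 1/√(n−1)). The sample sizes and the number of trials are arbitrary choices of mine.

```python
import math
import random
import statistics


def pearson(xs, ys):
    """Sample Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def correlation_spread(n, trials, rng):
    """Standard deviation of the sample correlation of two independent normal variables."""
    correlations = []
    for _ in range(trials):
        xs = [rng.gauss(0, 1) for _ in range(n)]
        ys = [rng.gauss(0, 1) for _ in range(n)]
        correlations.append(pearson(xs, ys))
    return statistics.pstdev(correlations)


rng = random.Random(2021)  # fixed seed so the run is repeatable
spread = {n: correlation_spread(n, trials=300, rng=rng) for n in (10, 100, 1000)}

for n, s in spread.items():
    print(f"n = {n:>4}: simulated spread = {s:.3f}, 1/sqrt(n-1) = {1 / math.sqrt(n - 1):.3f}")
```

The simulated spread shrinks roughly tenfold for every hundredfold increase in sample size, which is the comforting part of the story for a single pair of variables.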

As more and more data is sampled, representing diverse events or outcomes, and added to our big data storage (or database), the chance of finding spurious correlations in otherwise independent data will increase. Of course there may be legitimate (causal) correlations in such a database as well. But the point is that there may also be many spurious correlations, of an obviously or much less obviously nonsensical nature, leading to data-driven decisions without a legitimate basis in the data used. The range (i.e., max – min) of the statistics (e.g., the correlation between two data sets in our data store) will in general increase as the number of data sets increases. If you have a data set with data on 1000 different events, then you have almost half a million correlation combinations to trawl through in the hunt for “meaningful” correlations in your database. Searching (by brute force) for correlations in a database with a million different events would result in half a trillion correlation combinations (i.e., approximately half the square of the number of data sets for large databases). Heuristically, you will have a much bigger chance of finding a spurious correlation than a true correlation in a big-data database.
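The pair counts above, and the way the strongest spurious correlation grows with the number of data sets, can be checked with a short Python sketch. The simulated “database” is an assumption of mine: 100 mutually independent variables with 50 observations each, so every correlation found is spurious by construction.

```python
import math
import random


def n_pairs(k):
    """Number of distinct pairs among k data sets, k * (k - 1) / 2."""
    return math.comb(k, 2)


def standardize(values):
    """Shift to zero mean and scale to unit length, so a dot product equals the correlation."""
    n = len(values)
    mean = sum(values) / n
    centered = [v - mean for v in values]
    norm = math.sqrt(sum(c * c for c in centered))
    return [c / norm for c in centered]


def max_abs_correlation(variables):
    """Largest absolute pairwise correlation among standardized variables (brute force)."""
    best = 0.0
    for i in range(len(variables)):
        for j in range(i + 1, len(variables)):
            r = sum(a * b for a, b in zip(variables[i], variables[j]))
            best = max(best, abs(r))
    return best


rng = random.Random(1)  # fixed seed so the run is repeatable
n_obs = 50
variables = [standardize([rng.gauss(0, 1) for _ in range(n_obs)]) for _ in range(100)]

max_20 = max_abs_correlation(variables[:20])   # search among the first 20 variables
max_100 = max_abs_correlation(variables)       # search among all 100 variables

print(f"pairs among 1,000 events:     {n_pairs(1000):,}")
print(f"pairs among 1,000,000 events: {n_pairs(10**6):,}")
print(f"strongest spurious |corr| among  20 variables: {max_20:.2f}")
print(f"strongest spurious |corr| among 100 variables: {max_100:.2f}")
```

Because the 20 variables are a subset of the 100, the strongest correlation found can only stay the same or grow as more data sets are added, even though none of them has anything to do with any other.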

Does decision outcome matter?

But does it all matter? If a spurious correlation is persistent and sustainable, albeit likely nonsensical (e.g., the correlation between storks and babies born), a model based on such a correlation may still be a reasonable predictor for the problem at hand and maybe be of (some) value … However, would we bet our own company’s fortune and future on a spurious nonsensical correlation (e.g., there are more guaranteed ways of having a baby than waiting for the stork to bring it along)? Would we like decision makers to impose policy upon society based on such conjecture and arbitrary inference … I do not think so … That is, if we are aware and have a say in such.

In the example above, I have mapped out what a data-driven decision process could look like (yes, complex, but I could make it even more so). The process consists of 6 states (i.e., Idea, Data Gathering, Insights, Consultation, Decision, Stop) and actions that take us from one state to another (e.g., Consult → Decision), until the final decision point where we may decide to continue, develop further or terminate. We can associate with our actions the likelihood (e.g., based on empirical evidence) that a given state transition (e.g., Insights → Consult vs Insights → Decision, …) occurs. Typically, transitions are not symmetric, in the sense that the likelihood of going from state 1 to state 2 may not be the same as going from state 2 back to state 1. In the above decision process illustration, one would find that over many decision iterations (or over time) we would end up terminating an idea (or product) ca. 25% of the time, even though the individual transition, Decision → Stop, is associated with a 5% probability. Although this blog is not about “Markov decision processes”, one could associate reward units (which can be negative or zero as well) with each process transition and optimize for the best decision subject to the reward or cost known to the corporation.
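For readers who want to play with such a process, here is a minimal Python sketch of a six-state Markov chain. Only the state names come from the text. The transition probabilities are hypothetical placeholders of mine (apart from the 5% on Decision → Stop), and so is the assumption that a stopped idea is followed by a new one, so the output is not expected to reproduce the ca. 25% figure quoted above.

```python
STATES = ["Idea", "Data Gathering", "Insights", "Consultation", "Decision", "Stop"]

# Hypothetical transition probabilities; each row sums to 1.
# "Stop" leads back to "Idea" (assumption: a new idea follows a terminated one).
P = {
    "Idea":           {"Data Gathering": 0.90, "Stop": 0.10},
    "Data Gathering": {"Data Gathering": 0.20, "Insights": 0.80},
    "Insights":       {"Data Gathering": 0.10, "Consultation": 0.60, "Decision": 0.30},
    "Consultation":   {"Insights": 0.30, "Decision": 0.70},
    "Decision":       {"Idea": 0.95, "Stop": 0.05},
    "Stop":           {"Idea": 1.00},
}


def step(dist):
    """Push a probability distribution over states through one transition."""
    new = {state: 0.0 for state in STATES}
    for source, mass in dist.items():
        for target, prob in P[source].items():
            new[target] += mass * prob
    return new


def long_run_shares(iterations=5000):
    """Approximate the long-run share of time per state by repeated transitions."""
    dist = {state: 1.0 / len(STATES) for state in STATES}
    for _ in range(iterations):
        dist = step(dist)
    return dist


shares = long_run_shares()
for state in STATES:
    print(f"{state:<15} {shares[state]:.1%}")
```

The long-run share of a state depends on every route into and out of it, not only on the probability of a single transition. That is why a long-run figure can differ substantially from an individual transition probability.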

Though, let us also be real about our corporate decisions. Most decisions tend to be fairly incremental. Usually, our corporate decisions are reactions to micro-trends or relatively small changes in the business environment. Our decision making and subsequent reactions to such are, more often than not, incremental in nature. It does not mean that we, over time, cannot be “fooled” by spurious effects, or by drift in the assumed correlations, that may eventually lead to substantially negative events.

The survey.

In order to survey the state of corporate decision making in general and as it relates to data-driven decision making, I conducted a paid surveymonkey.com survey, “Corporate data-driven decision making and the role of Artificial Intelligence in the decision making process”. A total of 400+ responses were collected across all regions of the United States, census-balanced for gender and age (between 18 and 70), with an imposed annual household income of US$100k. 70% of the participants hold a college degree or more, and 54% of the participants describe their current job level as middle management or higher. The average age of the participants was 42 years. Moreover, I also surveyed my LinkedIn.com network as well as my Slack.com network associated with the Data Science Master of Science studies at the University of Colorado Boulder. In the following, I only present the outcome of the surveymonkey.com paid survey, as this has been sampled in a statistically representative way based on the USA census and within the boundaries described above.

Basic insight into decision making.

Just to get it out of the way, a little more than 80% of the respondents believe that gender does not play a role in corporate decision making. Though it also means that a bit less than 20% believe that one gender is better than the other at making decisions. 11% of the respondents (3 out of 4 of them women) believe that women are better corporate decision makers. Only 5% (ca. 3 out of 5) believe that men are better at making decisions. An interesting follow-up research topic would be decision making under stressed conditions. Though, this was not a focus of my questionnaire.

Almost 90% of the respondents are either okay with, enjoy or love making decisions related to their business. A bit more than 10% do not enjoy making decisions. There are minor gender differences in the degree of appreciation for decision making, but it is statistically difficult to say whether they are significant or not.

When asked to characterize their decision-making skill in comparison with their peers, about 55% acknowledge they are about the same as their peers. What is interesting (but not at all surprising) is that almost 40% believe that they are better at making decisions than their peers. I deliberately asked respondents to judge their decision abilities as “About the same” rather than average, but clearly did not avoid the so-called better-than-average effect often quoted in social judgement studies. What this means for the soundness of decision making in general, I will leave for you to consider.

Looking at gender differences in self-enhancement compared to peers, there are significantly more male than female respondents who believe they are better than their peers. For both genders, 5% believe that they are worse than their peers at making decisions.

Having the previous question in mind, let’s attempt to understand how often we consult with others (our peers) before making a business or corporate decision. A bit more than 40% of the respondents frequently consult with others prior to their decision making. In the survey, “frequently” has been defined as 7 out of 10 times or higher. Similarly, a bit more than 40% would consult others in about half of their corporate decisions. It may seem a high share that do not seek consultation on half of their business decisions (i.e., glass half empty). But keep in mind that we also make a lot of non-critical corporate decisions that are part of our job description and might not be important enough to bother our colleagues or peers with (i.e., glass half full). Follow-up research should explore the consultation of critical business decisions more carefully.

The gender perspective on consulting peers or colleagues before a decision-making moment seems to indicate that men seek such consultation more frequently than women (a statistically significant difference).

For many of us, our gut-feel plays a role in our decision making. We feel a certain way about the decision we are about to make. Indeed, for 60% of the respondents their gut-feeling was important in 50% or more of their corporate decisions. And about 40% of the respondents were of the opinion that their gut-feel was better than their peers’ (note: these are not the same ca. 40% who believe that they are better decision makers than their peers). When it comes to gut-feeling, its use in decision making and its relative quality compared to peers, there is no statistically significant gender difference.

The state of data-driven decision making.

How often is relevant data available to your company or workplace for your decision making?

And when such data is available for your decision-making process how often are you actually making use of it? In other words, how data-driven is your company or workplace?

How would you assess the structure of the available data?

and what about its quality?

Are you having any statistical checks done on your data, assumptions or decision proposals prior to executing a given data-driven decision?

I guess the above outcome might be somewhat disappointing if you are a strong believer in the Mathematical Corporation, with only 45% of respondents frequently applying more rigorous checks to validate their decisions prior to executing them.

My perspective is that if you are a data-driven person or company, assessing the statistical validity of the data used, the assumptions made and the decision options would be a good practice to perfect. However, not all decisions, even the data-driven ones, may be important enough (in terms of any generic risk exposure) to worry about statistical validity. Even if the data used for a decision is of a statistically problematic nature, and thus may add risk to or reduce the quality of a given decision, the outcome of your decision may still be okay, albeit not the best it could have been. And even a decision made on rubbish data has a certain chance of being right or even good.

And even if you have a great data-driven corporate decision process, how often do we listen to our colleagues’ opinions and also consider them in our decision making?

For 48% of the respondents, human insight or opinion is very important in the decision-making process. About 20% deem human opinion to be of some importance.

Within the statistical significance and margin of error of the survey, there does not seem to be any gender differences in the responses related to the data-driven foundation and decision making.
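To give a feeling for what “margin of error” and “statistically significant” mean at this sample size, here is a minimal Python sketch. The sample size of 400 is rounded from the 400+ responses. The even 200/200 gender split and the answer counts (90 versus 70) are hypothetical numbers of mine, not survey results.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Half-width of the approximate 95% confidence interval for a proportion."""
    return z * math.sqrt(p * (1 - p) / n)


def two_proportion_z_test(x1, n1, x2, n2):
    """z statistic and two-sided p-value for the difference between two proportions."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / standard_error
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided, normal approximation
    return z, p_value


# Worst-case (p = 0.5) margin of error for the whole survey and for one gender.
moe_all = margin_of_error(0.5, 400)
moe_group = margin_of_error(0.5, 200)

# Hypothetical example: 90 of 200 men versus 70 of 200 women choose an answer.
z, p_value = two_proportion_z_test(90, 200, 70, 200)

print(f"margin of error, n = 400: +/- {moe_all:.1%}")
print(f"margin of error, n = 200: +/- {moe_group:.1%}")
print(f"hypothetical 45% vs 35%: z = {z:.2f}, p = {p_value:.3f}")
```

Under these assumptions, a difference between the genders needs to be around ten percentage points before it stands out from the sampling noise, which is why smaller differences in the responses cannot be resolved.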

The role of AI in data-driven decision making.

Out of the 400+ respondents, 31 (i.e., less than 8%) had not heard of Artificial Intelligence (AI) prior to the survey. In the following, only respondents who confirmed having heard about AI previously were asked questions related to AI’s role in data-driven decision making. It should be pointed out that this survey does not explore what the respondents understand artificial intelligence or AI to be.

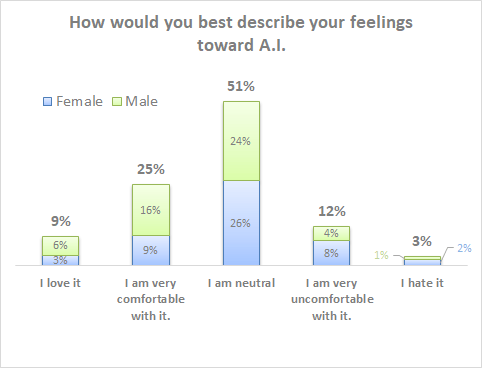

As has been consistent since I started tracking people’s sentiment towards AI in 2017, more women than men appear to have a negative sentiment towards AI. Men, on the other hand, are significantly more positive towards AI than women. The AI sentiment hasn’t changed significantly over the last 4 years, apart from maybe a slightly less positive sentiment and a more neutral positioning among the respondents.

Women appear to judge a decision-making optimized AI to be slightly less important for their company’s decision-making process. However, I do not have sufficient data to resolve this difference to a satisfactory level of confidence. Though, if present, it may not be surprising given women’s less positive sentiment towards AI in general.

In a previous blog (“Trust thou AI?”), I described in detail the human trust dynamic towards technology in general and towards cognitive systems in particular, such as machine learning applications and the concept of artificial intelligence. Over the years, the trust in decisions based on AI, which by definition would be data-driven decisions, has been consistently skewed toward distrust rather than trust.

Bias

Bias is everywhere. It is part of life, of being human, as well as of most things touched by humans. We humans have so many systematic biases (my favorites are: availability bias, which I see pretty much every day, confirmation bias and framing bias … yours?) that lead us astray from objective rationality, judgement and good decisions. Most of these so-called cognitive biases we are not even aware of, as they work on an instinctive level, particularly when decision makers are under stress or time constraints in their corporate decision making. My approach to bias is that it is unavoidable but can be minimized and often compensated for, as long as we are aware of it and its manifestations.

In statistics, bias is relatively easy to define and compute:

$$\mathrm{Bias}(\hat{\theta}) = \mathrm{E}[\hat{\theta}] - \theta$$

Simply said, the bias of an estimated value (i.e., statistic) is the expected value of the estimator $\hat{\theta}$ minus the true value of the parameter $\theta$ being estimated. For an unbiased estimator, the bias is zero (obviously). One can also relate the mean square error minus the variance of the estimator to bias: $\mathrm{MSE}(\hat{\theta}) - \mathrm{Var}(\hat{\theta}) = \mathrm{Bias}(\hat{\theta})^2$. Clearly, translating human biases to mathematics is a very challenging task, if at all possible. Mathematics can help us some of the way (sometimes), but it is also not the solution to all issues around data-driven and human-driven decision making.
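As a small illustration of statistical bias, the following Python sketch compares two estimators of a variance: dividing the sum of squares by n (biased) and by n − 1 (unbiased). The choice of example, the sample size of 5 and the normal population with true variance 1 are my own assumptions.

```python
import random


def variance_estimates(sample):
    """Return the (divide-by-n, divide-by-(n-1)) variance estimates of a sample."""
    n = len(sample)
    mean = sum(sample) / n
    sum_of_squares = sum((v - mean) ** 2 for v in sample)
    return sum_of_squares / n, sum_of_squares / (n - 1)


def summarize(estimates, true_value):
    """Empirical bias, variance and mean square error of a list of estimates."""
    count = len(estimates)
    mean = sum(estimates) / count
    bias = mean - true_value
    variance = sum((e - mean) ** 2 for e in estimates) / count
    mse = sum((e - true_value) ** 2 for e in estimates) / count
    return bias, variance, mse


rng = random.Random(123)  # fixed seed so the run is repeatable
true_variance = 1.0
n, trials = 5, 20_000

biased, unbiased = [], []
for _ in range(trials):
    sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
    b, u = variance_estimates(sample)
    biased.append(b)
    unbiased.append(u)

bias_b, var_b, mse_b = summarize(biased, true_variance)
bias_u, var_u, mse_u = summarize(unbiased, true_variance)

print(f"divide by n:     bias = {bias_b:+.3f}, MSE - Var = {mse_b - var_b:.3f}")
print(f"divide by n - 1: bias = {bias_u:+.3f}, MSE - Var = {mse_u - var_u:.3f}")
```

The divide-by-n estimator systematically underestimates the true variance (for n = 5 its expected value is 0.8 rather than 1), and in both cases the mean square error minus the variance equals the squared bias.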

Bias can be (and more often than not, will be) present in data that is either directly or indirectly generated by humans. Bias can be introduced in the measurement process as well as in data selection and post-processing, and then again in the modelling or analytic phase via the human assumptions and choices we make. In the final decision-making stage, which we can consider the decision-thinking stage, the outcome of the data-driven process comes together with the human interpretation & opinion part. This final stage also includes our business context (e.g., corporate strategy & policies, market, financials, competition, etc.) as well as our colleagues’ and managers’ opinions and approvals.

41% of the respondents believe that biased data is a concern for their corporate decision making. Given how much public debate there has been around biased data and its impact on public as well as private policy, it is good to see that the largest group of respondents recognizes the concern of biased data in a data-driven decision-making process, although they fall short of a majority. If we attribute the “I don’t know” response to uncertainty, and this uncertainty leads to questioning of bias in the data used for corporate decision making, then all is even better. This all said, I do find 31% having no concerns about biased data a relatively high number. It is somewhat concerning, particularly for decision makers involved in critical social policy or business decision making.

More women (19%) than men (9%) chose the “I don’t know” response to the above question. This may explain why fewer women chose ‘Yes’ on “biased data is a concern for decision making”, maybe giving the more honest answer of “I don’t know”. This is obviously speculation and might actually deserve a follow-up.

As discussed above, the possibility of biased data is not the only concern for our data-driven decision making. The tools we use for data selection and post-processing may also be sources that introduce biases, either directly, through the algorithms used for selection and/or post-processing, or indirectly, through the human choices and assumptions that go into the selected models or analytic frameworks (e.g., parametrization, algorithmic recipe, etc.).

On the question “Is biased tools a concern for your corporate decision making?” the answers are almost too nicely distributed across the 3 possibilities (“Yes”, “No” and “I don’t know”). This might indicate that respondents do not really have a preference or opinion, though more should have ended up in “I don’t know” if that were really the case. It is a more difficult technical question and may require more thinking (or expert knowledge) to answer. It is also a topic that has been less prominently discussed in media and articles. The danger with tools is of course that they are used as black boxes for extracting insights without the decision maker appreciating their possible limitations.

There seems to be a slight gender difference in the response. However, the differences within the question, as well as relative to the previous question around “biased data”, are statistically inconclusive.

After considering the possibility of biased data and biased tooling, it is time for some self-reflection on how biased we think we are ourselves, and to compare that to our opinion about our colleagues’ bias in their decision making.

Almost 70% of the respondents in this survey are aware that they are biased in their decision making. The remainder either see themselves as unbiased in their decision making (19%, maybe a bias in itself … blind spot?) or believe that bias does not matter in their decision making (11%).

Looking at our colleagues, we do attribute a higher degree of bias to their decisions than to our own. About 80% of the respondents think that their colleagues are biased in their decision making. 24% believe that their colleagues are frequently biased in their decisions, as opposed to the 15% of respondents who say so about their own decisions. Not surprisingly, we are also less inclined to believe that our colleagues are unbiased in their decisions compared to ourselves.

While there are no apparent gender differences in how the answers to the two bias questions are distributed, there is a difference in how we perceive bias for ourselves and for our colleagues. We may tend to see ourselves as less biased than our colleagues. This shows in more respondents believing that “I am not biased at all in my decisions” than believing the same of their colleagues (19% vs 12%), and in more respondents perceiving their colleagues as frequently biased in their decisions than perceiving themselves that way (24% vs 15%). While causation is super difficult to establish in surveys such as this one, I do dare speculate that one of the reasons we don’t consult our colleagues on a large share of corporate decisions may be the somewhat self-inflated image of ourselves as being better at making decisions and being less biased than our colleagues.

Thoughts at the end

We may increasingly have the technical and scientific foundation for supporting real data-driven decision making. It is clear that more and more data is becoming available to decision makers. As data stores, or databases, grow geometrically in size and possibly in complexity as well, the human decision maker is forced either to ignore most of the available data or to allow insights for the decision-making process to be increasingly provided by algorithms. What is important in the data-driven decision process is that we are aware that it does not give us a guarantee that the decisions made are better than decisions that are more human-driven. There are many insertion points in a data-driven decision-making process where bias can be introduced, with or without the human being directly responsible.

And for many of our most important corporate decisions, the available data is either small data, rare data or not available at all. More than 60% of the respondents characterize the data quality they work with in their decision-making process as Good (i.e., defined as uncertain, directionally ok with some bias and of limited availability), Poor or Very Poor. About 45% of the respondents state that data is available for 50% or less of their corporate decisions. Moreover, when data is available, a bit more than 40% of the corporate decision makers use it in 50% or less of their corporate decisions.

Compared to the survey 4 years ago, this time around questions on the respondents’ perception of bias in the decision-making process were introduced. About 40% were concerned about biased data influencing their data-driven decisions. Ca. 30% had no concern about biased data. Asked about biased tooling, only about 35% stated that they were concerned for their corporate decisions.

Of course, bias is not limited to data and tooling but extends to ourselves and our colleagues. When asked for a self-assessment of how biased the respondents believe themselves to be in their corporate decision making, a bit more than 30% either did not believe themselves to be biased or believed that bias does not matter for their decisions. Ca. 15% stated that they were frequently biased in their decision making. Of course, we are often not the only decision makers around; our colleagues make decisions as well. 24% of the respondents believed that their colleagues were frequently biased in their decisions. Moreover, 21% (vs 30% in the self-assessment) believe that their colleagues are either not biased at all or that bias does not matter for their decisions. This is maybe not too surprising, as respondents would very rarely self-assess to be worse decision makers than their peers.

Acknowledgement.

I gratefully acknowledge my wife Eva Varadi for her support, patience and understanding during the creative process of writing this Blog. Also, many of my Deutsche Telekom AG, T-Mobile NL & industry colleagues in general have in countless ways contributed to my thinking and ideas leading to this little Blog. Thank you!

Readings

Kim Kyllesbech Larsen, “On the acceptance of artificial intelligence in corporate decision making – A survey”, AIStrategyBlog.com (November 2017). Very similar survey to the one presented here.

Kim Kyllesbech Larsen, “Trust thou AI?”, AIStrategyBlog.com (December 2018).

Nassim Taleb, “Fooled by randomness: the hidden role of chance in life and in the markets”, Penguin books, (2007). It is a classic. Although, to be honest, my first read of the book left me with a less than positive impression of the author (irritating arrogant p****). In subsequent reads, I have been a lot more appreciative of Nassim’s ideas and thoughts on the subject of being fooled by randomness.

Josh Sullivan & Angela Zutavern, “The Mathematical Corporation”, PublicAffairs (2017). I still haven’t made up my mind whether this book describes an Orwellian corporate dystopia or paradise. I am unconvinced that having more scientists and mathematicians in a business (assuming you can find them and convince them to join your business) would necessarily be of great value. But then again, I do believe very much in diversity.

Ben Cooper, “Poxy models and rash decisions”, PNAS vol. 103, no. 33 (August 2006).

Michael Palmer, “Data is the new oil”, ana.blogs.com (November 2006). I think anyone who uses “Data is the new oil” should at least read Michael’s blog and understand what he is really saying.

Michael Kershner, “Data Isn’t the new oil – Time is”, Forbes.com (July 2021).

J.E. Korteling, A.-M. Brouwer and A. Toet, “A neural network framework for cognitive bias”, Front. Psychol., (September 2018).

Chris Anderson, “The end of theory: the data deluge makes the scientific method obsolete”, Wired.com (June 2008). To be honest, when I read this article the first time, I was just shocked by the alleged naivety. I have later come to understand that Chris Anderson meant his article as a provocation. “Satire” is often lost in translation and in the written word. Nevertheless, the “Correlation is enough” or “Causality is dead” philosophy remains strong.

Christian S. Calude & Giuseppe Longo, “The deluge of spurious correlations in big data”, Foundations of Science, Vol. 22, no. 3 (2017). More down my alley of thinking and while the math may be somewhat “long-haired”, it is easy to simulate in R (or Python) and convince yourself that Chris Anderson’s ideas should not be taken at face value.

“Beware spurious correlations”, Harvard Business Review (June 2015). See also Tyler Vigen’s book “Spurious correlations – correlation does not equal causation”, Hachette books (2015). It is just for fun and provides ample material for cocktail parties.

David Ritter, “When to act on a correlation, and when not to”, Harvard Business Review (March 2014). A good business view on when correlations may be useful and when not.

Christopher S. Penn, “Can causation exist without correlation? Yes!”, (August 2018).

Therese Huston, “How women decide”, Mariner books (2017). See also Kathy Caprino’s “How Decision-Making is different between men and women and why it matters in business”, Forbes.com (2016), based on an interview with Therese Huston. There is a lot of interesting scientific research indicating that there are gender differences in how men and women make decisions when exposed to considerable risks or stress, but overall there is no evidence that one gender is superior to the other. Though, I do know who I prefer managing my investment portfolio (and it’s not a man).

Lee Roy Beach and Terry Connolly, “The psychology of decision making”, Sage publications (2005).

Young-Hoon Kim, Heewon Kwon and Chi-Yue Chiu, “The better-than-average effect is observed because “Average” is often construed as below-median ability”, Front. Psychol. (June 2017).

Aaron Robertson, “Fundamentals of Ramsey Theory”, CRC Press (2021). Ramsey theory accounts for the emergence of spurious (random) patterns and correlations in sufficiently large structures, e.g., big data stores or databases. These are patterns and correlations that appear significant and meaningful without actually being so. It is easy to simulate that this is the case. The math is a bit more involved, although quite intuitive. If you are not interested in the foundational stuff, simply read Calude & Longo’s article (referenced above).

It is hard to find easy-to-read (i.e., non-technical) textbooks on Markov chains and Markov Decision Processes (MDP). They tend to cater to people with a solid mathematical or computer science background. I do recommend the following YouTube videos: on Markov chains in particular, I recommend Normalized Nerd’s lectures (super well done and easy to grasp, respect!). I recommend having a Python notebook on the side and building up the lectures there. On Markov Decision Processes, I found the Stanford CS221 YouTube lecture by Dorsa Sadigh reasonably passable, though you would need to have a good grasp of Markov chains in general. Again, coding in parallel with the lectures is recommended to get a hands-on feel for the topic. After those efforts, you should get going on reinforcement learning (RL) applications, as those can almost all be formulated as MDPs.
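The entries on Calude & Longo and on Ramsey theory above both note that spurious correlations are easy to simulate. A minimal Python sketch of that idea follows. It is only an illustration under assumed settings, not the construction used in those references: the function name `count_spurious_pairs`, the 20 observations per series and the correlation threshold of 0.6 are all hypothetical choices. Every series is independent random noise, so any "strong" correlation found is spurious by construction.

```python
import numpy as np


def count_spurious_pairs(n_series, n_obs=20, threshold=0.6, seed=0):
    """Count pairs of independent noise series whose correlation looks 'strong'."""
    rng = np.random.default_rng(seed)
    # Each row is one series of pure, independent noise: no real relationships exist.
    data = rng.normal(size=(n_series, n_obs))
    corr = np.corrcoef(data)  # pairwise Pearson correlations between rows
    # Keep each distinct pair once (upper triangle, excluding the diagonal).
    pair_corr = corr[np.triu_indices(n_series, k=1)]
    hits = int(np.sum(np.abs(pair_corr) > threshold))
    return hits, pair_corr.size


for n_series in (10, 100, 1000):
    hits, pairs = count_spurious_pairs(n_series)
    print(f"{n_series:5d} series, {pairs:7d} pairs, {hits:5d} with |r| > 0.6")
```

The number of pairs grows roughly with the square of the number of series, so the count of seemingly strong but meaningless correlations grows with it. That is the practical warning behind not taking "correlation is enough" at face value.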