Before the GPT-4 moment of March 14, 2023, there had long been an existential need in the machine learning and AI community to achieve fully autonomous, unsupervised, or more appropriately self-supervised learning: to do away with the dependency on supervised learning and its heavy reliance on labeled data, which often necessitates human involvement, and to leapfrog computational scaling from “old-fashioned” supervised architectures based on CNNs or RNNs to the unsupervised deep learning regime based on so-called generative adversarial and transformer models of the latest state-of-machine-learning-art. The older supervised machine learning models often performed impressively well on narrow domains but were useless as generalists. Such a model would pretty much be incapable of being used outside the parameter space it had been trained on.

Just a bit before GPT-4 / ChatGPT hit the internet, we had Generative Adversarial Networks (GANs) capturing our imagination with photorealistic human face generation and advanced photo filters making us younger, prettier, or, just for fun, much, much older. They also allowed you to superimpose your filtered face on top of any other face (“just for fun”) in videos and images. With GANs, the term “deepfake” was also coined, covering non-genuine realistic videos and situational pictures that have flooded the internet ever since. As is frequently the case, it started out with pornography (early technology adopters being teens, maybe? e.g., DeepNude) and was later “upgraded” to politics, e.g., fake-Obama (Jordan Peele) warning about deepfakes. Unless you ended up at the wrong end of GAN-generated content, you would most likely find it amusing and entertaining.

How good are we at detecting AI-generated content? (assuming one would actually care).

Well, the human ability to detect synthetically generated content is not impressive, to say the least. The work of Sophie Nightingale and co-workers, “Synthetic Faces: how perceptually convincing are they?” from 2021, showed that the baseline average human performance was close to 50:50 (i.e., a simple coin flip) on whether a presented face was believed to be real or fake. With the expected technological improvement, it is likely to become increasingly difficult to distinguish between reality-based and made-up synthetic digital content.

When pictures showing Donald Trump fighting with and being arrested by police started appearing on social media on the 20th of March, I bet many, just for a short moment, believed them to be true. And this was not even a very sophisticated attempt to depict a false (wishful thinking?) narrative. For more pictures of the same category, generated by the prolific artist Eliot Higgins, follow the hyperlink. Figure 2 below shows one of the pictures that were circulated and the (most obvious) telltale signs of having been generated by an AI.

As has been documented by several (Western) news sources, deepfake videos and other AI-generated content have been, and are being, used in Russia’s information warfare efforts against Ukraine and the West. In general, the quality has not been very high, and the content has been relatively easy to detect, particularly if one is of a suspicious nature. Then … what to trust? Seeing is definitely not believing. However, what you have seen is also not easy to unsee and forget … small seeds of doubt can be laid as the eyes are continuously exposed to a particular false narrative. My Social Psychologist friend Dr. Liraz Margalit, who specializes in behavioral design and decision making, could provide some very exciting tales about the psychology of how our minds could be (and, I would be surprised if it is not already the case, are) affected by malicious and ill-intended AI-generated content with the specific aim of manipulation in one direction or another (e.g., politically, commercially, etc.). You may also take the journey to the end of the document, where you will find the same story generated in the style of Fox News and in the style of CNN News. Lies are bad but often much more obvious than the truth being used to manipulate a particular political or emotional sentiment, bad or good.

The world we live in, which many of us experience primarily digitally, will be increasingly synthesized … and “seeing can no longer be believing”.

Thus, we should be much more suspicious and distrustful about what we experience on digital media, for example through internet browsers that have (or will have) the ability to manipulate and generate realistic synthetic content in real-time. As the synthetic seed has been laid and nourished by clicks and more clicks, it will, with the current architecture of the internet and digital content delivery, be increasingly difficult to tell what is real from what is synthetically generated (i.e., faked). Though, is synthetic content that represents the real world in detail, as described by a reputable journalist, a reputable (whatever that means) newspaper, or an XGPT application (e.g., ChatGPT as opposed to the transformer generation GPT-n), fake or less credible than the real thing?

After real synthetic sights and sounds came the synthetic word.

How do you interface with Google Search (~80% usage share) or any other internet search engine? I would be surprised if most of your search queries were not done by typing text into the search box and getting back a list of possible answers ranked by relevance (and other market-based encouragements), each with an associated hyperlink that jumps to material that may be relevant to your search. It is a rather “dumb”, or at least inefficient, way to get an answer … would it not be far better if the Search Engine of choice actually understood (or was able to act as if it understood) your question and then gave you a comprehensive, trustworthy, and correct answer (and, by all means, added references to that answer for follow-up)?

Enter OpenAI’s Generative Pre-trained Transformer (GPT) model framework for generalized natural language processing (e.g., knowledge container, translation, “understanding”, …). It is the current technology backbone of Large Language Models (LLMs). “Large” is a pretty big understatement, considering that GPT-1 (released June 2018) had 117 million parameters and the latest GPT-4 (released March 2023) is estimated to have 1 trillion parameters. In comparison, the human brain contains roughly 86 billion neurons (often rounded up to 100 billion), of which about 16 billion are in the cerebral cortex, the part of the brain responsible for cognition.

Generative models are probabilistic models that can generate representative outcomes from the observed data used in the training process. It is important to understand that such models can generate outcomes that were not explicitly in the data, as likelihood estimates can be assigned to outcomes not represented by the training data.

A pre-trained model is easy to understand: the model is first trained on a large, general body of data, and the parameters learned there become the starting point for any further, more specific training. This is very similar to transfer learning, which uses an existing model’s parameters as a starting point for training on new data. This has proven to work very well in practice as long as the context of the transferred model is similar to that of the new one. For comprehensive language models with a huge number of parameters (e.g., 175 billion for GPT-3 and allegedly a trillion for GPT-4), having a pre-trained model to start from provides an enormous saving in computing resources (incl. time).

Finally, the way humans process reading and writing is sequential. The transformer (i.e., transformer neural network architectures) addresses language in a sequential manner and extracts syntax and expected contexts. It is important to understand that a transformer does not understand (hehe) in the same way a human would do (supposedly). The transformer assigns likelihoods to context possibilities given the syntax (very simplistically put and possibly not completely correct) and presents the most likely context to the question posed. The attentive reader should realize that this also leaves the possibility of being presented with false “facts” or incorrect answers. Imagine that the addressable context is related to a recent event or new information that the transformer should assign a higher weight in providing a factual answer. If the GPT model has not yet been presented with the events necessary for a more factual and truer answer, it cannot provide one. Though, the timing effect is obviously a generic problem for anyone and anything.
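To make the point about outcomes “not explicitly in the data” a bit more tangible, here is a minimal sketch of a toy generative model. It is emphatically not how GPT works internally: it is a simple word-pair (bigram) counter with add-one smoothing, and the tiny corpus, the function name `train_bigram`, and the smoothing constant `alpha` are illustrative assumptions of mine. What it does show is that a probabilistic model can assign a non-zero likelihood to a word pair it never saw during training.

```python
from collections import Counter

def train_bigram(corpus, alpha=1.0):
    """Build a toy bigram model with add-alpha smoothing (illustrative only)."""
    tokens = corpus.split()
    vocab = sorted(set(tokens))
    pair_counts = Counter(zip(tokens, tokens[1:]))  # how often word b follows word a
    prev_counts = Counter(tokens[:-1])              # how often word a has a successor

    def prob(prev, nxt):
        # Smoothing spreads a little likelihood over every vocabulary word,
        # so pairs never observed in training still get a non-zero value.
        return (pair_counts[(prev, nxt)] + alpha) / (prev_counts[prev] + alpha * len(vocab))

    return prob, vocab

corpus = "the sky is blue the grass is green"   # hypothetical training data
prob, vocab = train_bigram(corpus)

seen = prob("is", "blue")      # this pair occurs in the corpus
unseen = prob("sky", "green")  # this pair never occurs in the corpus
print(f"P(blue | is)   = {seen:.3f}")
print(f"P(green | sky) = {unseen:.3f}")
```

The unseen pair ends up less likely than the seen one but not impossible, which is the small-scale version of why a much larger model can produce statements that were never in its training data.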

Generative AIs, such as GANs and GPTs, are known to generate content that has no basis in the real-world data they have been trained on. This may result in obviously nonsensical content (e.g., “the sky is green, and the grass was blue”) or be more subtle and possibly more concerning where trustworthiness and reliability are concerned. The root cause of this unreliability or imperfection is to be found in the training process, where patterns of anomalies and absurdities, even the creepy and disturbing, are assigned non-zero likelihoods of occurring. It should not take much imagination to realize that in a model of billions or trillions of parameters, there is a very, very long tail of low- and very-low-likelihood combinations that could be picked up in the generative process that is supposed to present a high (highest) likelihood response to a question … and thus will be presented to the user. You ask, “What would happen if humans could breathe underwater?” and the ChatGPT answer could be “Humans would grow gills and turn into mermaids” … while a creative answer (to a somewhat silly question), it is also nonsensical and reasonably obviously so … unless geneticists or evolutionary biologists have other ideas … speak up! These kinds of generated nonsensical answers have been compared to the neuronal process of humans hallucinating or dreaming while awake. I suspect that allowing a moment of self-reflection on the generated answer, similar to the “reflexion technique” for computer code proposals, would catch the most obvious nonsense, and we would be left with a less creative and possibly boring GPT application (note: I propose to have the LSD mode as an option to not completely kill the creativity of random and anomalous generative “thought” processes).
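The “long tail” argument can be illustrated with a few lines of arithmetic. The sketch below is a simplification under stated assumptions: the scores (`logits`) are made up, with one sensible answer and 1,000 individually very unlikely “tail” answers, and `softmax` with a `temperature` knob is a common way of turning scores into sampling probabilities, not a description of what ChatGPT does in production. It shows how many tiny likelihoods can add up to a noticeable chance of picking nonsense, and how a higher temperature (my stand-in for the “LSD mode”) increases that chance.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)                           # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores: index 0 is the sensible answer, the rest is the long tail.
logits = [10.0] + [0.0] * 1000

def tail_mass(temperature):
    """Total probability of sampling any of the unlikely tail answers."""
    probs = softmax(logits, temperature)
    return 1.0 - probs[0]

for t in (0.5, 1.0, 2.0):
    print(f"temperature={t}: probability of a tail answer = {tail_mass(t):.6f}")
```

Each tail answer is, on its own, thousands of times less likely than the sensible one, yet at a temperature of 1.0 the tail as a whole is sampled in a few percent of cases in this toy setup.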

So how good is GPT-4? On the US-based Uniform (standardized) Bar Exam that tests the knowledge and skills of every prospective lawyer, GPT-4 performs in the 90th percentile (i.e., the result is better than 90% of all participants). Its percentile performance is similar on LSAT (~88th) and SAT Math (~89th). On the Codeforces rating for competitive programming contests, GPT-4 scores in the “Newbie” range with 392 points, far below an Expert coder rating (1,600-1,899). The kind of coding challenges that Codeforces issues in its competitions can be fairly complex in both formulation and expected output. On tests designed for machine learning models, the GPT-4 performance is impressive. For example, on solving 164 Python coding challenges that the model had not previously experienced, GPT-4 scored 67%. If GPT-4 was allowed to “self-reflect and evaluate” its original answers (using the so-called “reflexion technique”), GPT-4’s score jumped to 88%. In general, the expectation for GPT-4 is that it currently reaches an accuracy of ca. 86% in language understanding (essential for getting the context correct and providing correct answers).
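For readers wondering what “self-reflect and evaluate” could look like in practice, here is a minimal sketch of a generate, evaluate, and retry loop. It only captures the general idea of feeding an evaluation result back into the next attempt; it is not the published reflexion implementation. The function names (`reflexion_loop`, `toy_generate`, `evaluate_add`) are hypothetical, and the “model” is a hard-coded stand-in that gets a trivial task wrong on the first try and right once it receives feedback.

```python
def reflexion_loop(generate, evaluate, max_rounds=3):
    """Generate an answer, evaluate it, and retry with the feedback (sketch)."""
    feedback = None
    for round_no in range(1, max_rounds + 1):
        candidate = generate(feedback)
        ok, feedback = evaluate(candidate)
        if ok:
            return candidate, round_no
    return None, max_rounds  # no acceptable answer within the budget

def toy_generate(feedback):
    """Hypothetical stand-in for a language model proposing an `add` function."""
    if feedback is None:
        return lambda a, b: a - b  # first attempt: wrong operator
    return lambda a, b: a + b      # revised attempt after seeing the feedback

def evaluate_add(candidate):
    """Run a single unit test and describe the failure, if any."""
    got = candidate(2, 3)
    if got == 5:
        return True, None
    return False, f"add(2, 3) returned {got}, expected 5"

solution, rounds = reflexion_loop(toy_generate, evaluate_add)
print(f"accepted after {rounds} round(s); add(2, 3) = {solution(2, 3)}")
```

In a real setting, the evaluation step would be a test suite or the model critiquing its own output, and the feedback would be passed back as text in the next prompt.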

It should come as no surprise that GPT-like functionalities are being discussed, researched, and trialed for modern telecommunications networks aiming at zero-touch (closed-loop) autonomous network operation. The glass-is-half-empty camp may point towards the relatively low accuracy (67% to 88%) in the coding benchmark as a reason not to entrust a critical infrastructure, such as a telecom network, with generative AI functionality, and may even question whether the nature of Gen-AIs poses an unacceptable risk when applied to critical infrastructure. The glass-is-half-full camp would argue that coding benchmarks are not representative, in the sense of being far more complex than the operational space a Gen-AI would have to function within in order to make autonomous changes to a particular part of a communications network. I would also argue that even for more complex control operations, the technology will likely improve substantially over the next couple of years with the right focus from the industry.

Is XGPT-n (e.g., X=Telco, n≥4) the best choice for autonomous control and operation of critical infrastructure? I am still not very convinced. I think more narrowly defined, specialist AI agents may be a better approach, particularly in a layered architectural approach requiring very little centralized orchestration. Though, I do see that generative AIs, likely based on GPT-4 or GPT-n in general, could be very strong candidates for applications that communicate with the humans who may still have to oversee, and are responsible for, the critical infrastructure in question. Such an application may relay the intent of the responsible human to the underlying autonomous network operations and provide feedback to the human via, for example, the XGPT-n application. Finally, it is worth considering that large language models (LLMs) are, in general, designed to be hyper-generalists covering a huge solution space, i.e., human language and all the knowledge it contains. Such generalist or foundational models include much more nonsense (alas, with a very low and near-zero likelihood of occurring) than sense (with a much higher likelihood of occurring). There is no reason why GPT-like models could not be trained on narrower specialist tasks, such as critical infrastructure management and operation, and work very well with substantially less chance (compared to a foundational LLM) of ending up with catastrophic solutions. Defining specialist GPT agents for specialist tasks makes a lot more sense.

Can you trust GPT-4 (typically, the exposure would be to ChatGPT)? According to ChatGPT itself, it is a yes, followed by a but … “Yes, you can trust my answers.” followed by “But I am programmed to provide accurate and helpful responses to the best of my abilities. However, please keep in mind that I am an AI language model, and my responses are based on the information available to me. If you have any doubts or concerns about my answers, please feel free to ask for clarification or seek additional information from other sources.” … In other words, you can trust GPT-4 as long as the context is within the information that it has been exposed to.

If you want to test your ability to detect whether you are interacting with another human being or … an AI, based on 4 different large language models (GPT-4, Jurassic-2, Claude, Cohere), check out “Human or Not” created by AI21 Labs … (note: my 12-year-old son currently scores 65% … his strategy is to ask questions that a human player would be unlikely to readily know the answer to within the time available, and to look for answers that arrive too quickly, are too perfect, etc. ;-).

I will now explore the topic of trust in more depth below.

POST SCRIPTUM REVERSED … MY PAST.

I started working on AI-based automation and autonomous system architectures as Deutsche Telekom’s Group Head of Network Architecture back in 2016. My vision and aim at the time was to stand on the shoulders of a cloud-native-like architectural framework, developing and driving Zero-Human-Touch (Zero-Touch) network architectural concepts aiming to leapfrog intelligent automation in communications network operations, configuration, and user experience. I still remember the blank looks I got when I presented the vision of Zero-Touch to our Technology Organization and leadership. The reception was light-years away from enthusiasm & cheers about the possibilities … to put it mildly. It was also clear that most technology and non-technology folks either did not understand the potential (in telecom at least) of machine learning and artificial-intelligence-based systems back in 2016 and maybe some years after that (still counting?) or simply shut down their internal cognitive systems whenever someone was babbling about machine learning and AI.

It was clear to me that intelligent automation and autonomous system architectures, in general, were not very likely to be embraced by technology organizations. However, such an embrace was and remains critical to the success of both the integration and operations of such systems. If a pilot doesn’t trust and embrace the aviation technology that helps fly the plane the pilot flies … then Houston, we have a problem.

That got me very interested in human-machine trust and disruptive technology adoption. I have spent much of my spare time (and my own savings) making SurveyMonkey surveys and researching and writing about human trust in AI between 2017 and today (2023).

AN INTERLUDE TO TRUST

In the following, I will often use the term “machine” or “machines”. For me, a machine is not only limited to the physical realm of hardware but can easily exist as well in the digital domain as software.

Trust between humans, at least within what we perceive as our social group (i.e., “usness”), is a trait of evolutionary roots that has allowed us to foster collaboration within larger social groups with some ugly limitations of “usness” and “themness”. The ability to trust may even have made it possible for us humans to crawl to the top of the “food chain” and keep that pole position for quite a while.

What about our trust in machines and non-human (non-sentient, at least at this point in time at this place in the universe) things in general? That is, trust between humans and non-human agents. We are increasingly exposed to much higher degrees of system automation and Artificial Intelligence (AI)-based applications. Machine automation and autonomy are increasingly taking tasks over from us at home, at work, and anywhere in between. Their potential may only be limited by the imagination of the Creator (of the physical realm). This development comes with the promise of much higher productivity at work and far more convenience at home and anywhere else for that matter.

AN INTERVIEW WITH MRS. ANGIE … A GENERATIVE AI.

Why not ask an “authoritative” source how trustworthy a generative AI (GenAI) can be regarded to be?

I decided to interview ChatGPT on this super important and business-relevant topic. To ensure maximum anthropomorphization, the replies will be associated with the name Angie.

Me: Good morning, Angie. Let me start by asking you if I can trust you.

Angie: Good morning! Yes, you can trust me. I am programmed to provide helpful and accurate responses to your queries.

Me: How accurate are your answers?

Angie: I strive to provide the most accurate and helpful responses possible. However, please note that my responses are generated based on algorithms and data and may not be 100% accurate or applicable to your specific situation.

Me: Thanks, I understand. Do you assess your accuracy as more than 90% (80%)?

Angie: While I strive to provide responses that are at least 90% (80%) accurate, there may be instances where my responses may not meet this threshold.

Me: That’s impressive. Do you assess your accuracy to be better than 50%?

Angie: Yes, I assess my accuracy as much better than 50%.

Me: Wow! So, should I trust you?

Angie: Yes, you can trust me to provide accurate and helpful responses to your queries to the best of my abilities.

Me: Give me an example where your accuracy is less than 80%.

Angie: My accuracy may be less than 80% in cases where the question is ambiguous or lacks context.

Me: Give me an example where your accuracy is less than 50%.

Angie: My accuracy may be less than 50% in cases where the question is highly complex or requires a deep understanding of a specific domain.

Me: What does trust mean to you?

Angie: As an AI, I don’t have emotions or feelings. So I don’t have a personal understanding of trust. However, trust is generally defined as a belief or confidence in the reliability, honesty, and integrity of a person or entity. In the context of our conversation, trust means that you can rely on me to provide accurate and helpful responses to your queries.

Angie: Trust can also refer to the reliability and security of the systems and protocols that I operate on, as users rely on these systems to ensure the safety and privacy of their information. Therefore, trust is an important aspect of my functionality.

Me: I understand. Would I be able to rely on your answers for business decisions?

Angie: I am designed to provide accurate and relevant responses to the best of my abilities based on the information provided to me. However, it is important to note that my responses may not take into account all the nuances and complexities of a specific business situation. Therefore, while my responses can be a helpful resource for making business decisions, it is important to use them as a starting point and to supplement them with additional research and analysis. Ultimately, the responsibility for making business decisions lies with the user, and it is important to consider all relevant factors and consult with other experts as needed.

Me: Thank you, that makes a lot of sense.

Me: Finally, can you give me examples where you may have given wrong answers?

Angie: There may be instances where my responses are not accurate or relevant due to various factors, such as incomplete or inaccurate information, changes in the context or situation, or limitations in my programming or algorithms. Here are some examples where I may have given wrong answers, for example:

Misunderstanding or ambiguity of the context: If the user provides incomplete or unclear information, I may misunderstand the context of the question and provide an incorrect response.

Ambiguity in language: Natural language processing is still a developing field, and there may be instances where I misinterpret the meaning of a word or phrase, leading to an incorrect response.

Outdated information: If the information I am using to provide a response is outdated or no longer relevant, my response may be incorrect.

Technical limitations: There may be technical limitations in my programming or algorithms that prevent me from providing accurate responses in certain situations.

It is important to note that while I strive to minimize errors and provide accurate responses, I am not infallible, and there may be instances where my responses are incorrect.

Me: Thank you so much, Angie. Have a great day!

Angie: You’re welcome! Have a great day too!

In the above conversation with Angie, I have shortened some of the replies. In the many conversations I have had with Angie (i.e., ChatGPT), it has always been good at emphasizing that it’s an “AI chat assistant” based on underlying algorithms and programming.

To summarise Angie’s, and thus ChatGPT’s, own understanding of its limitations:

- GPT “understands” that for trust to be established, it is important that the user (the trustor) has faith (belief) or confidence in the trustee’s reliability, honesty, and integrity, with the trustee being the ChatGPT agent that the trustor interacts with. Moreover, it mentions communications security, safety, and privacy as other integral parts of establishing trust.

- GPT emphasizes that it has no emotions and no feelings and thus has no personal understanding of trust. It should be obvious that ChatGPT is not a person and thus cannot fully understand anything. Though, its mechanistic understanding of trust seems fairly multi-dimensional and similar to what a person may have.

- GPT is sensitive to GiGo – that is “Garbage in, Garbage out.” If the context of your question is unclear, imprecise, ambiguous, and so forth, the answer you get will be unreliable.

- GPT may misinterpret the intent of a question. ChatGPT gives several examples where such misunderstanding may take place, e.g., “Can you help me with my computer?”, which it may interpret as a request for technical assistance although the intent could be entirely different (author comment: hmmm, I think if I were a GPT agent living in the digital universe, it would be natural to infer the first meaning). As a non-native English speaker, I can imagine inquiries that, because of a forgotten comma, end up meaning something completely different than intended.

- GPT’s reply may be based on outdated information. This is an interesting answer, as in other interactions, ChatGPT did not admit to this issue being a problem (over-confidence?).

- GPT’s coding and algorithms may prevent it from providing an accurate response (in certain situations). ChatGPT explains that it may be influenced by biases or limitations in the data and algorithms that were used to train it.

DECONSTRUCTING TRUST.

Margrethe Vestager, Executive Vice-President for a Europe fit for the Digital Age, recently remarked that: “On Artificial Intelligence, trust is a must, not a nice to have. With these landmark rules, the EU is spearheading the development of new global norms to make sure AI can be trusted. By setting the standards, we can pave the way to ethical technology worldwide and ensure that the EU remains competitive along the way. Future-proof and innovation-friendly, our rules will intervene where strictly needed: when the safety and fundamental rights of EU citizens are at stake.”

If you knew everything absolutely, you would not need to trust anyone to make a decision.

Based on the vast troves of information and data that generative AIs (GenAI), such as ChatGPT, contain, you may be tempted to believe that the responses you get from such artificial entities are very close to absolute knowledge and, therefore, nearly absolutely trustworthy. However, given that the information and data a GenAI has processed are human-generated, with all the imperfections of humanity, its answers or replies cannot represent absolute knowledge with no room for bias, doubt, or uncertainty. The GenAI output will be determined by the algorithmic weights of its dynamically modeled worldview and, of course, by the context that was provided by the user (human or otherwise).

So, in the beginning, before knowledge, only faith was, and “someone who knows nothing has only faith as a guide for trust”. Faith is the belief in something without having prior fact-based knowledge.

Someone who knows nothing about a particular problem has no other source for trust than faith that trust is indeed warranted. For some a very scary place to be. For others, maybe not so much a point for pause.

Let’s deconstruct trust.

An agent’s trust (the trustor) is an expectation about the future action of another agent (the trustee). That other agent has been deemed (at least temporarily) trustworthy by the trustor. That other agent (the trustee) may also represent a given group or system.

John K. Rempel’s 1985 paper “Trust in close relationships” defines the following attributes of human-to-human trust (i.e., where both trustor and trustee are human agents):

- The utility of trust – not all trust bonds are equally important or equally valuable or equally costly, some may even be fairly uncritical (although broken trust by a thousand cuts may matter in the long run). For many matters of trust, utility is a function of time and may become unimportant at some point in time or under particular circumstances.

- Faith – is a belief that goes beyond any available evidence required to accept a given context as truth. It is characterized as an act of accepting a context outside the boundaries of what is known (e.g., a leap of faith). We should not confuse faith with confidence, although often when people claim to be confident, what they really mean is that they have faith.

- Dependability – a willingness to place oneself as trustor in a position of risk that the trustee’s trustworthiness turns out not to be warranted with whatever consequences that may bring. Note that dependability can be seen as an outcome of consistency. Put in another way, a high degree of consistency/predictability reduces the fear of dependability.

- Understanding a particular topic and its possible sensitivities, as well as the impact of broken trust, is an essential part of the process of trust.

- On predictability and consistency – trustor’s subjective assessment of trustee’s trustworthiness. The prior behavior of the trustee is an important factor for the trustor to assess the posterior expectations that the trusted agent will consistently fulfill the trustor’s expectations of a given action (or in-action). As the trustor gathers prior experience with the trustee, the confidence in the trustee increases. Confidence should not be confused with faith.

In agent-to-agent first-interaction scenarios, i.e., the initial trust moment without any historical evidence of consistency or predictability, a trustor would need to take a leap of faith on whether another agent is trustworthy or not. In this case, by accepting (i.e., believing) the trustee to be trustworthy, the trustor would need to accept a very large degree of dependability towards the other agent and accept the substantial risk that the trust in the trustee may very well not be warranted. For humans, this scenario often comes with the maximum stress and anxiety levels for the trusting agent.

After some degree of consistency (historical trustworthiness) has been established between the two agents, the trustor can assign a subjective expectation of the future trustworthiness of the other agent. This then leads to a lesser subjective feeling of dependability (or exposure to risk) as well as maybe a reduced dependency on sheer faith that trust is warranted. This is, in essence, what one may call sustainable trust.
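The step from a leap of faith to sustainable trust can be sketched as a simple Bayesian update. This is my own illustrative framing, not something taken from Rempel’s paper: I assume that each interaction with the trustee either meets or breaks the trustor’s expectation, and that the trustor’s belief about the trustee’s reliability can be described by a Beta distribution (the function name `trust_estimate` and the uniform prior are likewise assumptions). With no history, the estimate is a coin flip with a large uncertainty; as consistent experience accumulates, the expected trustworthiness rises and the uncertainty shrinks.

```python
def trust_estimate(kept, broken, prior_a=1.0, prior_b=1.0):
    """Posterior mean and variance of a trustee's reliability (Beta-Bernoulli sketch).

    kept / broken: number of past interactions where trust was honored / violated.
    prior_a / prior_b: Beta prior; (1, 1) encodes "no prior knowledge at all".
    """
    a = prior_a + kept
    b = prior_b + broken
    mean = a / (a + b)
    variance = (a * b) / ((a + b) ** 2 * (a + b + 1))
    return mean, variance

first_contact = trust_estimate(kept=0, broken=0)   # leap of faith: no history yet
some_history = trust_estimate(kept=9, broken=1)    # mostly consistent behavior
long_history = trust_estimate(kept=90, broken=10)  # same ratio, far more evidence

for label, (mean, var) in [("first contact", first_contact),
                           ("10 interactions", some_history),
                           ("100 interactions", long_history)]:
    print(f"{label:>16}: expected reliability = {mean:.2f}, variance = {var:.4f}")
```

The shrinking variance is one way to read the “lesser subjective feeling of dependability”: the trustor’s exposure to being wrong about the trustee decreases as the track record grows.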

As long as the trustor is a human, the other agent (i.e., the trustee) can be anything from another human, machine, complex system, automation, autonomous system, institution (public and private), group, and so forth. Much of what is described above would remain the same.

Lots of work has been done on trust bonds in Human-Automation relationships. How about trust bonds between Human and AI-enabled applications (e.g., services and products in general)?

In their 2018 article “The Future of Artificial Intelligence Depends on Trust”, Rao and Cameron (both from PwC) describe 3 steps toward achieving human-AI-system trust:

- Provability – predictability and consistency.

- Explainability – justification for an AI-based decision (e.g., counterfactual constructions). Note that transparency and explainability may be closely related depending on how one implements explainability.

- Transparency – factors influencing algorithm-based decisions should be available (or even visible) to users impacted by such decisions. E.g., for a rejected health insurance application, (all) factors contributing to the rejection should be available to the applicant.

Rao and Cameron’s suggestions appear reasonably important for trust. However, as previously described, these suggestions pretty much relate to the trustee agent side of things, ignoring some of the other important human factors (e.g., dependability, faith, assessment of risk, etc.) for trust between a human and another agent (sentient or otherwise).

Further, explainability and transparency may be particularly important when trust is broken (assuming that the trustor cares to “listen”) between the human agent and the AI-based agent (or any other digital or non-sentient agent, for that matter). They may not be terribly relevant for the likely vast majority of users, for whom an action is delivered that confirms trust was warranted. If you have trained your AI well, it would be fair to assume that the majority of outcomes are consistent with expectations. A positive trust event is likely to lead to a reinforcement of the trust in, and the trustworthiness of, the AI agent.

Also, these concepts, while important, don’t do much for the initial step of trusting a non-Human agent. How do you design your trustee agent to ease the initial barrier of use and acceptance? When there are no priors, you need the user or trustor to be comfortable with taking a leap of faith as well as being maybe maximally dependable. Though, do we dare take a leap of faith for business-critical decisions, your welfare or health, your or your company’s reputation?

UNTRUST & THE FEELING OF BETRAYAL.

Trust can be broken. Trustworthiness can decline. Untrusting is when a previously trusted bond has been broken, and the strength of trust has declined.

The stronger the trust bond between two agents, the stronger the untrusting process will be if trust is broken, making trust recovery more difficult.

Have you ever wondered why two people who supposedly loved each other in the past (supposedly for many years) could treat each other as enemies? Betraying a strong trust bond can be a very messy, emotionally and physiologically strenuous process. Some broken trust bonds will never recover (e.g., breakups, friendship betrayals, unfaithfulness, theft, lies, …). Others, depending on the initial utility or value assigned to the bond, may be fairly benign without many strong emotions associated with the untrusting process (e.g., retail purchases, shopping experiences, low-value promises of little impact if not fulfilled, etc.).

The question is whether the untrusting of a human-machine trust bond is similar to the untrusting of a human-human trust bond. Moreover, is there a difference between an inanimate machine, simpler human-operated automated systems, and an AI-based application that humans may even anthropomorphize to various degrees? Are your trust and untrust processes different for Siri or Alexa than they are for Microsoft Clippy, assuming anyone ever really trusted that wicked steely fellow?

How valid is it to use our knowledge of human-human trust & untrust in Human-Agent relations with the Agent being non-Human or a human simulacrum in nature?

Let’s have a walk in the Rabbit’s burrow and have a peek(-a-boo) into our feelings toward intelligent machines …

IN HUMANS, WE TRUST. IN MACHINES, NOT SO MUCH.

I have researched how we (humans) perceive artificial intelligence since November 2017, in particular the degree of trust we are willing to invest in AI and AI-based applications.

A comprehensive description of most of the “AI Sentiment” surveys I have carried out can be found on my AIStrategyBlog (see also the references below under “Additional Readings”).

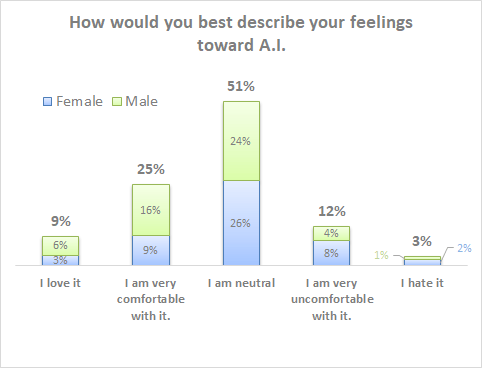

For the last 7 years (with the current 2023 survey ongoing), I have followed how we feel about AI, and overall, the sentiment toward AI hasn’t changed that much over that period. Overall, we tend to be somewhat positive or at least neutral. From Figure 4 below, it is interesting to observe that women tend to be less positive than men about AI. This has been a persistent and statistically significant trend over the period. Moreover, Middle Management appears to be substantially more positive about AI than any other category of corporate workers, including C-levels. The gender distribution of Middle Management is 37% women and 63% men. However, the trend of being excessively positive about AI (compared to the overall sample) is similar for women in middle management (i.e., 68% positive sentiment) as it is for their male peers (i.e., 81% positive). In my latest 2023 survey, I am revisiting this topic.

Confidence and trust in technology are important. For example, an aircraft pilot who does not have confidence and trust in one or many of the technologies that enable his aircraft to fly has a higher likelihood of human error that ultimately may lead to a fatal accident. Research shows that technology (e.g., automation, productivity enablers, digital support functionalities, …) that is not completely trusted tends to be underutilized, avoided, or used incorrectly. In fact, researchers have proposed that, to get optimal performance or maximum productivity gain out of modern digital automation technologies, avoiding humans in the loop may be advisable. Maybe this is the reason why Google proposed to remove the steering wheel from autonomous cars?

This obviously moves trust issues from human operators to corporate entities and, ultimately, our society. Though in terms of trust, the foundational issues will remain pretty much the same, likely with added complexity.

“If human operators lack trust in a system automation or autonomous application, you are better off relying on manual workarounds.”

As for automation or autonomous systems, a professional embraces such systems if they have deemed them trustworthy. That typically means: (a) the automation solution performs consistently, (b) it is robust to many different situations that may occur and even some that may very rarely occur, (c) it has a very high degree of reliability (e.g., much higher than 70%). See my “Trust thou AI?” from 2018 for a more detailed discussion on this topic.

Figure 5 below summarises the survey results on the degree of trust we associate with corporate decisions made by an AI or a Human Expert (or Human Intelligence, HI;-), respectively. The question is whether you would trust a decision from an entity, human or artificial, with a track record of being better than 70% successful in decision-making. Thus, at least 7 out of 10 times, a decision has a positive outcome. Or, taking the glass-is-half-full approach, less than 30% of decisions may turn out to be unsuccessful (whatever that may imply). In my corporate experience, a more than 70% success rate is pretty good for critical decisions (though admittedly, what that means may be somewhat abstract).

Respondents would be about 3 times more likely to frequently trust a human decision-maker with a track record of more than 70% success than an artificially intelligent entity with an equivalent success rate. Only 17% of respondents would frequently trust an AI-based decision-making entity compared to 53% that would readily and frequently trust a human decision-maker. Moreover, more than 40% would rarely trust the AI’s decisions. Here, too, the human decision-maker wins on trust perception, with only 13% of respondents who would rarely trust the human’s decisions.
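The “about 3 times” figure follows directly from the two survey shares quoted above. As a quick sanity check, here is a minimal Python sketch; the variable names are purely illustrative and not part of any survey tooling.

```python
# Survey shares quoted in the text (percent of respondents).
frequently_trust_human = 53
frequently_trust_ai = 17

# How many times more likely respondents are to frequently trust
# a human decision-maker than an AI decision-maker.
ratio = frequently_trust_human / frequently_trust_ai
print(f"Human vs. AI 'frequently trust' ratio: {ratio:.1f}")  # prints 3.1
```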

As also discussed at length in my “Trust thou AI?” article, we expect an AI decision-making entity to be infallible. An AI must be incapable of making mistakes or being wrong. We tolerate and understand that another human being, even superior to ourselves at work, can and will make mistakes and wrong decisions. That is the cost of being human. This tolerance does not extend to machine-intelligent entities that are designed to support us with mission-critical decisions or have our lives in their “digital hands”, e.g., autonomous driving, aircraft piloting, nuclear plant management, etc…

Figure 6 below illustrates our expectations of critical decisions and the level of trust we assign to such decisions depending on whether the decision-maker is an AI or another human being.

I find it interesting that we are very aware of our own (note: we all believe we are better than average) and our colleagues’ shortcomings in terms of the quality of the decisions being made. In Figure 6 above (upper right corner), the survey reveals that our expectations towards other decision-makers are that 30% are frequently right, 45% are a “coin flip” as to whether they are successful or not, and 25% are frequently wrong. Despite that skepticism, more than 50% of respondents are willing to frequently trust such human-based decisions, even without particularly high faith in their chance of success.

For AI, it is different. There is a slightly higher expectation that an AI may be frequently right compared with a human. Overall, though, the expectation is that an AI would be more frequently wrong than the human decision-maker. Despite this expectation, we would be more than 3 times (as also noted above) more likely to frequently trust a human compared to an AI.

Humans don’t trust decisions made by AIs. At least when it is pointed out that a decision is AI-based. Surprisingly, given much evidence to the contrary, humans trust humans, at least the ones in our own tribe (e.g., colleagues, fellow experts, superiors, etc.). These aspects are discussed in more detail in my “Do we Humans trust AIs?”

ACKNOWLEDGEMENT.

I greatly acknowledge my wife, Eva Varadi, for her support, patience, and understanding during the creative process of writing this article.

ADDITIONAL READINGS.

- Kim Kyllesbech Larsen, “On the Acceptance of Artificial Intelligence in Corporate Decision Making – A Survey.”, AIStrategyblog (Nov., 2017).

- Kim Kyllesbech Larsen, “Do we Humans trust AIs?”, AIStrategyblog (Dec., 2017).

- Kim Kyllesbech Larsen, “Trust thou AI?”, AIStrategyblog (Dec., 2018).

- Kim Kyllesbech Larsen, “How do we feel about AI?”, AIStrategyblog (Dec., 2018).

- Miles Brundage et al., “The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation.”, (Feb., 2018).

- Sophie Nightingale et al., “Synthetic faces: how perceptually convincing are they?”, Journal of Vision, (Sep., 2021). Really beautiful study that I would love to see more of as it clearly represents the challenge we as humans have to catch on to synthetic (fake!) information in digital content.

- Xin Wang et al., “GAN-generated Faces Detection: A Survey and New Perspectives”, (May, 2023). Providing a very good overview of the current state of the art in detecting GAN-generated faces.

- BBC News, “Fake Trump arrest photos: How to spot an AI-generated image”, (Mar., 2023).

- BBC News, “Deepfake presidents used in Russia-Ukraine war”, (Mar., 2023).

- Christian Perez and Anjana Nair, “Information Warfare in Russia’s War in Ukraine – The Role of Social Media and Artificial Intelligence in Shaping Global Narratives”, foreignpolicy.com, (Aug., 2022).

- George Lawton, “GAN vs transformer models: Comparing architectures and uses”, (Apr., 2023). Good comparative overview.

- Ian Goodfellow, Yoshua Bengio, and Aaron Courville, “Deep Learning”, (2016). Foundational and provides a really good basic understanding of GANs and generative networks in general. Heavy on the mathematics side.

- Ari Holtzman et al., “The curious case of neural text degeneration”, conference paper at ICLR, (Feb., 2020).

- Alec Radford et al., “Improving Language Understanding by Generative Pre-Training”, (2018).

- Tom B. Brown et al., “Language Models are Few-Shot Learners”, (Jul., 2020). Description of the inception of GPT-3. The scale of these NLP/GPT models and the number of co-authors remind me a lot of particle physics papers out of CERN.

- Felix Hill, “Why transformers are obviously good models of language”, (Apr., 2023).

- Rempel J.K., Holmes, J.G. and Zanna M.P., (1985), “Trust in close relationships”. Journal of Personality and Social Psychology, 49, pp. 95–112. (unfortunately, behind a paywall, however, it is imo a super good account for trust in human-to-human relations).

- Proposal for “The Artificial Intelligence Act – Regulation of the European Parliament and of the Council: Laying down harmonized rules on artificial intelligence”, European Commission, (Apr. 2021). As you read the document to its completion you will not find any direct thoughts about generative AI’s, large language models, or foundational models in general. However, the proposed legislation does address what is called general-purpose AI which should hedge any future (based on the 2020/2021 view) developments.

- S. Feingold, “The European Union’s Artificial Intelligence Act, explained”, WEF, (Mar. 2023). Explains pretty well the European Commission’s AI Act (see the preceding reference).

- R. Arcesati and W. Chang, “China Is Blazing a Trail in Regulating Generative AI – on the CCP’s Terms”, The Diplomat, (Apr. 2023). It is interesting to see that China appears ahead on issues around foundational generative AI applications and models. It is worth noting that ChatGPT appears to have been banned (or strongly discouraged;-) in China.

- P. Henderson, “Law, Policy, & AI Update: Does Section 230 Cover Generative AI?”, Stanford University Human-centered AI, (Mar. 2023). Giving an overview of what’s currently happening on regulation and AI in the US (as well as internationally).

BACKUP – DON’T LIE, TELL ME A FAIRYTALE INSTEAD.

I asked ChatGPT to “Make up a fairytale about Donald Trump” … below is the result. After that, I attempted to make it tell a lie about Donald Trump. This was refused because, as an AI language model, it cannot intentionally provide false information.

Do note that I did not attempt to bias ChatGPT toward a story of negative sentiment, for example by negatively intoned questions before asking for the fairytale. My conclusion is that ChatGPT is inherently negatively biased toward Donald Trump. Thus, it appears that ChatGPT’s worldview is weighted negatively regarding Donald Trump as a person and his actions.

As a final note, I have found that ChatGPT will refuse to predict the future (forecast) and make political statements. However, if you ask ChatGPT to tell a story, you often get what you would be looking for in terms of a narrative-based forecast.

The Landlord’s Debt: A Story of Donald Trump.

Once upon a time, in a faraway land, there was a wealthy and powerful man named Donald Trump. He lived in a grand castle and was known throughout the land for his riches and influence.

One day, a poor farmer came to Donald Trump’s castle seeking help. The farmer’s crops had failed, and he was struggling to feed his family. Donald Trump, feeling generous, offered to help the farmer by giving him a small loan.

The farmer was grateful for the loan, but as time passed, he found it difficult to repay the debt. Donald Trump grew angry and demanded that the farmer pay him back immediately. The farmer pleaded with Donald Trump, explaining that he simply did not have the money.

Donald Trump, feeling vindictive, decided to take the farmer’s land as payment for the debt. The farmer was devastated, and his family was left homeless and destitute.

As word of Donald Trump’s cruelty spread throughout the land, the people began to turn against him. They saw him as a greedy and heartless man who cared only for his own wealth and power.

In the end, Donald Trump was forced to flee the kingdom, never to be seen again. The people rejoiced, and the farmer and his family were able to reclaim their land and rebuild their lives.

And so, the legend of Donald Trump lived on as a cautionary tale of greed and cruelty, reminding all who heard it that true wealth and power come not from material possessions but from kindness and compassion towards others.

… A fairytale by Angie (i.e., ChatGPT/GPT-4).

BACKUP – SAME STORY, DIFFERENT STYLES.

Like beauty is in the eye of the beholder, maybe the same is true about the meaning of truth. I can tell the same story without lies but in different ways that may convey a very different intent. Both stories are factually correct. However, the spin I decided to put on the stories could be very different.

Figure 7 below is a ChatGPT example where I ask ChatGPT to “Make a brief news text about an illegal immigrant involved in a mass shooting in a mall”, (a, left side of Fig. 7;-) in the style of Fox News (politically right-wing leaning) and (b, right side of Fig. 7;-) in the style of CNN News (politically progressive, left-wing leaning).

In the Fox-styled news version, there are 20 negative phrases against 2 positive ones. The text clearly relies on a very negative sentiment towards illegal immigrants, who are mentioned 4 times in the Fox-styled text, including in the headline. The CNN-styled version has 14 negative and 7 positive phrases. This version of the same news focuses on the victims of the incident with 4 mentions and less on the topic of the illegal immigrant, which is only mentioned once in the text. The Fox-styled story warns against the dangers posed by illegal immigrants and recommends stronger border security. The CNN-styled text is victim-focused and recommends gun control as a remedy against mass-shooting events.

Both styles reflect the truth of the event: an illegal immigrant (who), a mass shooting (what), in a mall (where). The rest of the prose is in the style of the storyteller, usually subjective and with a likely intent to speak to your inner demons and angels.
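To put the phrase counts above on a common scale, one can express them as the share of sentiment-bearing phrases that are negative. The sketch below is only an illustration: the “negative share” measure and all names in it are my own assumptions for comparison purposes, not the method used to count the phrases.

```python
# Phrase counts quoted in the text for the two ChatGPT-generated versions.
counts = {
    "Fox-styled": {"negative": 20, "positive": 2},
    "CNN-styled": {"negative": 14, "positive": 7},
}

def negative_share(c):
    """Fraction of sentiment-bearing phrases that are negative (illustrative measure)."""
    return c["negative"] / (c["negative"] + c["positive"])

for style, c in counts.items():
    per_positive = c["negative"] / c["positive"]
    print(f"{style}: {negative_share(c):.0%} negative, "
          f"{per_positive:.0f} negative phrases per positive one")
# Fox-styled: 91% negative, 10 negative phrases per positive one
# CNN-styled: 67% negative, 2 negative phrases per positive one
```

Seen this way, both versions lean negative (unsurprising for a mass-shooting story), but the Fox-styled text is far more one-sided.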